Computer systems should not harm humans in any way — and that includes not embarrassing people or making them feel bad. Back in 1942, Isaac Asimov made this do-no-harm principle his First Law of Robotics, but we still haven’t achieved this fundamental state of not being harmed by our computers. Despite amazing achievements in digital technologies, many systems never arrive at user delight, and many companies still release usability disasters.

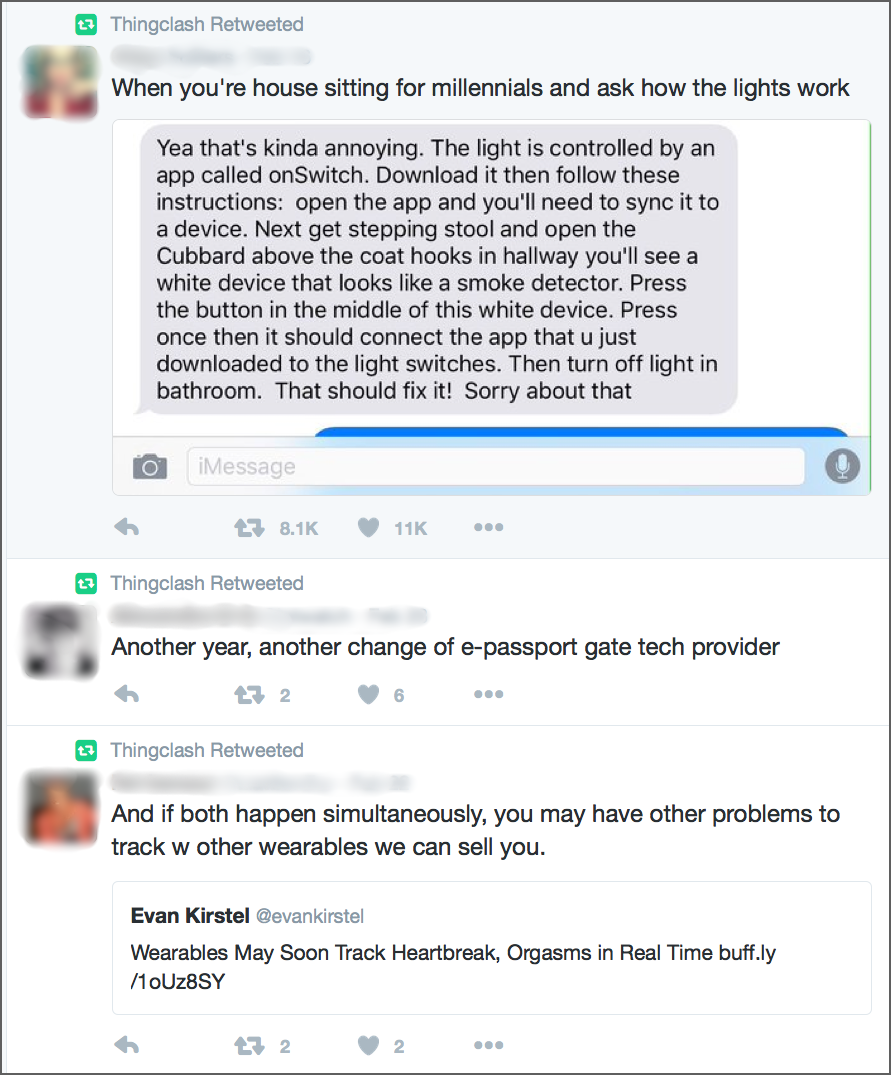

It’s not enough to have systems that function according to specifications. And it’s no longer enough to have systems that are usable. A plethora of smart devices have invaded our world and inserted themselves in almost every context of our existence. Their flaws and faulty interactions are no longer only theirs — they reflect badly on their users and embarrass them in front of others.

In other words, by making smart devices ubiquitous, we’ve exposed ourselves to computer-assisted embarrassment. We must expand our usability methods to cover not only the isolated user in one context of use, but also the social user, who interacts with the system in the presence of others, and the communities of users that use systems together.

In the early days of computing, people were content to simply run programs on their computers. Usability was closely tied to functionality and understandability. As digital systems matured and became more capable, concerns for ease of use grew into the field of user-experience design, which aims to improve people’s experiences before, during, and after computer use. Attention to task completion and user satisfaction expanded into caring about many other aspects of human-product-company interactions, such as measures of quality, people’s perceptions of value, and their emotional responses to technology.

Computers and smart devices have become social actors. We talk to them, they reply, and they act on our behalf, representing us in communications and commerce. Digital assistants lurk in living rooms, hanging on every word, but they often misinterpret people. As systems get smarter, we’ll expect them to be more helpful and better behaved. We expect our software to be truthful, trustworthy, and polite to us.

User-experience design must also be concerned with social user experience: the situated experiences with technologies in the context of groups of people. We need to understand how the behaviors of what we design fit or do not fit with families, work environments, school cultures, and play.

Dinner, open-plan offices, sporting events, and church services all require different behaviors from both our digital systems and us. Some systems fit more politely into our contexts, for example by offering settings for Meeting mode or Do Not Disturb mode that can override normal settings in predictable ways. These modes can be quite helpful if we understand their consequences, know which mode we’re in, and remember when to switch.

Some systems go further: for example, they predict what people want by using sensors to detect motion and using information from clocks, maps, and calendars to guess intentions or contexts of use. Some systems even learn from people’s actions.

Other systems, however, have default settings that cause embarrassment: for example, they publicly report your most private activity as exercise, or they automatically turn off the phone ringer just when you’re expecting a late-night call.

Social Embarrassment

There are countless examples of computer-assisted embarrassment. Here are just a few of the typical classes of problems. You could probably add many of your own excruciating moments to this list:

- Communication disasters: Messaging systems often make it easy to include unintended recipients or messages, or to send from an unintended address. Group SMS messages often end in embarrassment for everyone when messages thought private are distributed to the whole group.

- Privacy leaks: Systems often expose, share, or don’t really delete private information (such as photos, names, and locations), sometimes without you becoming aware of the problem until much later. Wireless microphones and cameras that you think are off or muted may actually be transmitting private moments live.

- Terrible timing: For those not using 24-hour clocks, alarms and appointments may default to 2 am instead of 2 pm. Calendars may invite people to 1-minute meetings. Unwanted sound effects can spoil quiet moments, such as volume-change sounds that intrude awkwardly into recordings and meetings. Personal messages tend to pop up during formal presentations.

- Aggressive helpfulness: Autocorrect or other interface features may unexpectedly embarrass you in front of colleagues. Algorithms that add your enemies to your contacts or speed-dial lists may cause distress or even danger.

- One-size-fits-all alerts: Apps and services often spam your social-media contacts with automated messages. Notifications may make noise on all your devices. Public alerts from 800 miles away can wake up everyone in your household in the middle of the night. Relentless, intrusive upgrade notifications sometimes won’t take “No thanks” for an answer, even when some technical or policy issue prevents you from ever saying yes.

- Systems designed for only one gender, ethnic group, or set of abilities: Image search that does not recognize black people, voice-recognition systems that don’t work for some dialects, and devices that don’t work unless you can use both hands are just a few of the ways design has gone terribly wrong because teams design systems for people just like themselves. The first law of interface design is: You are not your user. These systems are an embarrassment to their creators, but, more important, they also cause harm and embarrassment to the people who have been left out of the design specifications.
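
Some of the timing problems in the list above could be caught by a simple plausibility check before an event is saved. The following is only a minimal sketch: the function names, the "small hours" window, and the 5-minute threshold are assumptions made for illustration, not taken from any real calendar product.

```python
from datetime import datetime, timedelta

# Assumed thresholds, chosen only for illustration.
SMALL_HOURS = range(1, 6)                    # 1:00 am through 5:59 am
MIN_PLAUSIBLE_LENGTH = timedelta(minutes=5)


def twelve_hour(moment: datetime) -> str:
    """Format a time as it would appear on a 12-hour clock, e.g. '2:00 pm'."""
    hour = moment.hour % 12 or 12
    suffix = "am" if moment.hour < 12 else "pm"
    return f"{hour}:{moment.minute:02d} {suffix}"


def confirmation_prompts(start: datetime, end: datetime) -> list[str]:
    """Return questions to ask before saving; an empty list means save silently."""
    prompts = []
    if start.hour in SMALL_HOURS:
        # The user may have meant the same clock time twelve hours later.
        afternoon = start + timedelta(hours=12)
        prompts.append(
            f"This starts at {twelve_hour(start)}. Did you mean {twelve_hour(afternoon)}?"
        )
    if end - start < MIN_PLAUSIBLE_LENGTH:
        prompts.append("This event lasts less than 5 minutes. Is the end time right?")
    return prompts
```

A check like this asks one extra question only in the rare suspicious case, so ordinary afternoon meetings are saved without interruption.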

Algorithms that are not specific or accurate enough can be a big source of embarrassment. For example, LinkedIn combined a Jewish socialist’s profile photo with news of a white supremacist’s political activity in an automated email announcement and sent it to the wrong man’s social network, simply because both people had the same name. According to a Slate article about this incident, LinkedIn knew that it had a sloppy piece of software, but used it anyway, relying on readers to report errors after the fact. The Will Johnson who wrote the Slate article wonders how many people may erroneously be declared dead using similar methods. In a professional network, extreme embarrassment can feel fatal even if it only kills your employment prospects.
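
To make the point about specificity concrete, here is a minimal sketch of a stricter matching rule. It assumes a hypothetical `Person` record and an invented policy: a news mention is linked to a profile only when the names match and at least one other known attribute agrees. It does not describe how LinkedIn's software works; every name and field below is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Person:
    """Hypothetical record; the fields are illustrative assumptions."""
    name: str
    city: Optional[str] = None
    employer: Optional[str] = None


def is_confident_match(profile: Person, mention: Person) -> bool:
    """True only if the names match AND another known attribute agrees."""
    if profile.name.casefold() != mention.name.casefold():
        return False
    corroborating = [
        (profile.city, mention.city),
        (profile.employer, mention.employer),
    ]
    # A shared name alone is never enough; missing attributes count as no evidence.
    return any(
        a is not None and b is not None and a.casefold() == b.casefold()
        for a, b in corroborating
    )
```

Under this rule, two people who share only a name are never merged automatically; such cases would be skipped or sent for human review instead of published.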

Mapping out unintended and potentially harmful user consequences (and how to avoid and mitigate them) should be part of every system’s design plan. Start by asking yourselves, “What could possibly go wrong?”

Awkward Phone Moments

Mobile phones have introduced a number of new ways for technology to embarrass us. Here are just a few examples.

Some phones make accidental calls, from inside your pocket or bag or when you just want to look at contact information, and they sometimes don’t hang up when you’re finished with a call.

When you are talking on the phone, nearby people might think you are talking to them. If that happens, you’ll have to apologize both to the person with whom you intended to talk and to those with whom you didn’t, and you’ll likely cause embarrassment for everyone involved.

Sometimes mobile boarding passes disappear before you can use them at the airport. Should you look for them in your email attachments, in an app, in a browser download area, or are they images in your camera app? Keeping track of where the boarding pass belongs is an additional memory burden and increases people’s cognitive load. Sometimes you have to go through the online check-in process again while at the gate, just to display the barcode in order to board the plane. No pressure. We’ll all wait patiently while you do that.

Airline customer-service reps on Twitter get complaints from angry travelers with disappearing mobile-phone boarding passes.

Fortunately, airlines and travelers are learning to compensate for these issues, by providing better instructions, using images, and going back to print.

Phone banking or mobile voting, anyone? How about phone passports and phone driver licenses? The consequences of failure are getting more and more severe all the time.

Examples of Good Contextual Usability

Some systems already exhibit contextual-UX smarts that can help prevent embarrassment:

- Gmail warns you if you mention an email attachment in a message but don’t attach one.

- Some Android phones can tell you if you’re going to arrive at a closed business.

- Some automobiles make it difficult to lock yourself out or to accidentally leave car doors unlocked.

- Some messaging systems make it simple for you to add appointments to your calendar as soon as you agree to do something.
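
Safeguards like the attachment reminder in the list above can be surprisingly simple in principle. The sketch below is not Gmail's implementation; it assumes a hypothetical function name and a tiny English-only list of trigger words, purely to show the shape of such a check.

```python
import re

# Hypothetical trigger words; a real product would need a richer, localized list.
ATTACHMENT_HINTS = re.compile(
    r"\b(attached|attachment|attaching|enclosed)\b", re.IGNORECASE
)


def should_warn_missing_attachment(body: str, attachment_count: int) -> bool:
    """Warn when the draft mentions an attachment but nothing is attached."""
    return attachment_count == 0 and ATTACHMENT_HINTS.search(body) is not None
```

The check runs at the moment of sending, which is the last point at which the mistake is still private and cheap to fix.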

Social Defects Cost Money, Too

Increasingly, people gauge ease of use against the best designs they’ve seen: a mediocre experience seems half empty rather than half full. When a product defect causes problems, a customer may go from being a word-of-mouth advocate to a writer of long-lasting, negative reviews.

Whenever computer systems aren’t well behaved, they reflect poorly on those who designed them. Many of the unexpected things that can happen through interactions with our not-smart-enough systems can be deeply embarrassing or otherwise make us feel bad. Those emotional regrets may last a long time because of how people tend to chain bad experiences together in memory. Each humiliation reminds us of other times we felt that way. It’s enough to make you prefer paper and pen.

Companies should pay a lot more attention to the return on investment for fixing problems that users find. It’s easier to measure usability improvements for time-wasting intranets, but poor designs also cost customers time, money, and often their dignity.

Don’t underestimate the costs of humiliating customers. No one is angrier than loyal customers let down by the companies and products they once loved. Such feelings of betrayal are expensive, whether you can measure them or not.

How Problems Can Occur

Andrew Hinton, in his book, Understanding Context, explains that context collapse causes a lot of these issues: activities that are fine in one context can be completely out of place in another, causing social awkwardness or worse. Permutations of digital and physical contexts create clashes in the social norms and in the expectations we have for other people, organizations, our digital systems, and ourselves.

It’s possible that some of these social problems appear because designers and researchers did not test systems in the right context, or they tested with the wrong people. But social defects are also difficult to find with the UX methods that teams normally use. Many problems likely happen because interfaces are tested in the lab, with one user at a time, and with no understanding or no realistic simulation of the actual context, scope, or scale of use. A system may be quite usable in isolation, in prototype, before it’s installed, with small amounts of data, when seated at a desk, or by particular types of users in just the right lighting; but when it’s in use at home, by groups of people, in public, or while doing normal life activities, these socially embarrassing issues appear.

Fixing Problems After Release Can Be Difficult

If you’re relying on finding and fixing embarrassing UX problems after a system has been released, think again. It’s often too difficult for users to report problems. Some companies also find it hard to track and react quickly to desperate user complaints posted in the wild.

Many systems cannot be changed after they are released. This ominous situation is often found in technologies we rely on and use frequently, such as phones, cars, computerized devices (internet routers, garage-door openers, entertainment systems, and other appliances) and, increasingly, in internet-of-things (IoT) ecosystems.

Rebooting your car is becoming common, but upgrading its software can be a complicated process. Watching your smart home become incompetent, nonfunctional, and untrustworthy is the kind of living-in-the-future that nobody wants. Designing for ease of use in context and over time is absolutely essential.

How to Find and Fix Contextual Problems in UX Designs

- Learn from well-designed systems that make people feel smarter.

- Do field studies to observe groups of people interacting with the system, and repeat studies over time. When interviewing and surveying users, ask about their emotional reactions, including annoyances and potentially embarrassing incidents.

- Ask people to self-report their interactions and any accidents or surprises while using the system daily, over time — for example, by using diary studies and other longitudinal study methods.

- Consider system usability in wildly different contexts, such as in countries (and companies) where the political environment is completely different from yours.

- Regardless of the intended use, think about a wide range of other use and abuse possibilities.

- Consider how a malicious user or an untrustworthy system operator might use the system to harm others.

- Consider the technology ecosystems and other products that should work well with yours. What’s most likely to break or change over time?

- Test early and often with a diverse range of people — alone and in groups.

- Make updating easy to do. Ensure that your systems can be repaired easily over time, to mitigate unforeseen problems and keep people safer.

- Plan how to keep personal information secure over time. Some things don’t belong in the cloud. Often data can’t be truly anonymized, secured, or deleted, so be very careful which information is shared by default or automatically collected from the user. Don’t collect what you can’t protect.

- Give users control of their personal information and sharing choices by making them aware of both their options and their risks.

- Consider whether contextual and social defects could create privacy, security, legal, and safety issues.

- Make it easy for anyone to report usability bugs. Look at user-reported problems for clues about expectation mismatches and unintended effects.

- Tell stories about usability problems that lay the blame where it belongs, on the system and its policies.

- Don’t suffer in silence. Complain about systems that make you and others uncomfortable or embarrassed, whether or not you are on that design team. Companies tend to fix systems that many people are unhappy with.

Social Defects in Systems Matter

Some pundits expect robots to replace half of human jobs and self-driving vehicles to take over the highways soon. In any case, the systems of the future will be more social, more powerful, and more able to cause harm. As human dependency on smart systems increases, it’s very likely that software quality and access defects will also become business liability issues, no matter what the unread disclaimer on the package says.

Given the unexpected longevity of software made decades ago, it’s easy to predict that some of the systems developed today might be around for a long time. If we want people to live happily with the systems we design, we need to prevent usability problems, including social defects, and plan to repair and update systems long after deployment.

References

Asimov, Isaac. Robot science fiction series, 38 short stories and 5 novels. Various publishers worldwide, 1939–1986.

Hinton, Andrew. Understanding Context. ISBN 1449323170. O'Reilly Media, USA, December 2014.